MS Sharepoint 2010 – Administrator’s Companion

Over 90% of the Sharepoint Configuration and contents are stored in SQL server: lists, libraries, farm config, central admin info, service application info, search info, web app info, logginging info, reporting services, global content types, global metadata, info management policy info.

Sharepoint 201 supports (1) SQL 2005 and (2) SQL 2008 (and R2). Must be 64bit.

What is a SQL server instance? Three primary components:

(1) relation database engine

(2) system database

(3) user database

After you install SQL server and during a complete install of SP 2010, two user databases are created: (1) Sharepoint_Config and (2) Sharepoint_AdminContent.

Default SQL server instance – the first SQL server installation.

Named Instance – The one after 1st instance is created. With Named instance, you install another copy of the SQL server engine and the SQL server agent services. For named instance, the name of the SQL services become MSSQL$NameOfInstance and SQLAgent$NameOfService.

Multiple SQL instances give you the ability to have different SQL server settings on each instance. E.g., one instance can use only Windows Integrated Security, and another can use mixed security.

SQL Browser – listens on UDP port 1434 and directs the connection to the proper dynamically chosen TCP/IP port for each named instance.

To identify the named instance, use this format: NameOfComputer\NameOfInstance, for example, App01\Sharepoint

Four system databases are created for each SQL instance – Master, MSDB, Model and Tempdb

Sharepoint User Databases: at least (1) Farm Configuration DB, (2) Central Admin DB and (3) Company Portal database.

If you choose to install Sharepoint 2010 Search, 3 additional user database arw added: (1) Search Service Admin DB, (2) Search Service Crawl DB and (3) Search Metadata DB.

Others: Usage and health Data Collection service Application, which uses Logging Database. Etc.etc.

Windows PowerShell for SQL Server 2008

SQL server 2008 introduced a set of Windows Powershell SQL server snap-ins that expose SQL server functionality in Powershell. You can use powershell scripts to work with all SQL server objects. These scripts can be runin Windows Powershell prompt, in SQL Server Management Studio and SQL Server agent jobs.

The following components were installed:

(1) Windows Powershell 1.0

(2) SQL server snap-ins

(3) sqlps utility, which is used to run Windows Powershell sessions that include the SQL server snap-ins.

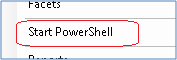

In SQL Management Studio, right click any object, and you can envoke SQL Powershell session:

Installing and Configuring SQL server 2008 for Sharepoint 2010 —

[SQL Server Express Edition] is the SQL server edition installed if you choose to perform a [Basic Sharepoint 2010] installation.

Optomizing SQL server performance —

(1) SQL Server memory Settings – SQL server has two uses for memory (1) buffer/data cache — for caching data — and (2) procedure cache – for caching stored proc and execution plans. To see if you have enough memory/RAM: Check these two ratios:

<1> Buffer Manager – Buffer Cache Hit Ratio: Percentage of pages found in DATA buffer without having to read from IO

<2> Cache Manager – Cache Hit Ratio: Percentage of pages found in the Procedure cache without having to read from IO.

To configure SQL server memory usage: Right click the SQL instance, à Property à Memory:

Database files locations: Do not use the default file location.

Options:

(1) Network Attached Storage (NAS) – not the most popular

(2) Storage Area network (SAN) —

(3) Direct Attached Storage (DAS) —

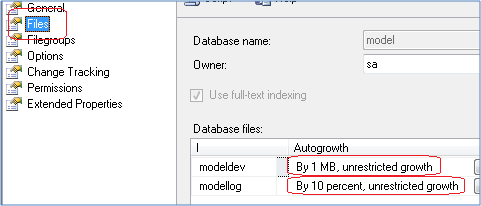

Model Database Settings —

Every Sharepoint database that you create inherits most of its database settings from the system DB called Model,

such as initial size of primary data file, initial size of transaction log, recovery model etc. Problems – the default initial size is small, the autogroth is small, and the file path is the default path in C:. So it is important to modify the Model DB property after Sharepoint installation.

SQL DB – Auto Shrink – do this only if you’re sure that the database won’t grow to omuch. This will free up some DB space. Good for the development environment. Auto-Shrink is resource-intensive, and runs every 30 minutes. It will perform only when the DB has more than 25% of free space

in it.

T-SQL: To shink a DB called UserDB to size of its data + 10%

DBCC ShrinkDatabase(UserDB, 10);

GO;

TO Shrink a DB file (DataFile1) in DB UserDB to 7MB

Use UserDB;

GO;

DBCC ShrinkFile(DataFile1, 7)

GO;

Pre-Creating

Your Sharepoint Content Database —

You can pre-create content database and then point to this DB from insode Sharepoint. Advantage: all the Model database settings are inherited, including the Autogrowth that is not inherited when you create your content databases from Sharepoint. Disadvantage: the default collation setting (SQL_Latin1_general_CP1_CI_AS) is not compatible with the required Sharepoint database collation type, so need to change it to Latin1_General_CI_AS_KS_WS. (case insensitive, accent sensitive, kana sensitive and width sensitive)

settings to check for all Sharepoint Databases:

SQL Server instance:

(1) Data and transaction log files default location: Locate on a RAID array or a different drive than Data/transaction log; do not locate on OS drive.

(2) minimum server memory:

(3) max server memory:

Sharepoint Database:

(1) Collation: Must be Latin1_General_CI_AS_KS_WS

(2) Recovery Model: Set to FULL for content DB’s.

(3) Trans log file size: 25% of the data file’s initial size.

etc. etc. etc.

TempDB Database settings —

(1) Place tempDB on the fastest IO system available;

(2) Create multiple data files to maximize disk bandwidth.

(3) Put the tempdb database on disks other than those used by your content databases.

(4) Set the recovery model to SIMPLE. (default)

Sharepoint Content Database Recovery Model —

3 recovery models:

(1) Full – retians all data in transaction log after writing it to the data file

(2) Bulk-logged – retains entries made in transaction log when bulk transactions occur.

(3) Simple – Purges all transactions from transaction log after writing them tho a data file.

What is transaction log?

A transaction log is instrumental in maintaining data integrity within that DB. The transaction log receives the following types of SQL server actions, called transactions.

(1) New information added (insert)

(2) Existing information changed (updated)

(3) Existing information deleted (Delete)

When you add a document, insert or update a list, the action is first written to the content database’s transaction log. After a period of time (60 seconds), SQL server runs a process called checkpoint. The checkpoint reads the inserts, updates, and deletes stored in the transaction log and applies them to the data files for that database.

After that, for simple Model, the transaction log file entries are removed; for FULL model, it is preserved.

Full Recovery Model —

Is the default model. The transaction log only get purged when you issue the command BACKUP LOG. To prevent the transaction log from growing too large, schedule a transaction log back up after a full database backup.

Simple Recovery Model —

Mostly for dev environment; disadvantage is that it does not allow you to backup the transaction log. No recovery is possible.

Verifying data integrity using DBCC CHECKDB – (DBCC – Database Console Command)

Run this command regularly to verify logocal and physical integrity

DBCC CHECKDB REPAIR_REBUILD

Run this to check the consistency of the disk space allocation structures:

DBCC CHECKALLOC

Runthis to check the integrity of all pages on every table and view

DBCC CHECKTABLE

Optomizing Outside of SQL Server —

Use 64-KB allocation unit size for data, transaction log and tempDB.

Monitoring SQL I/O with SQLIO.exe —

Not limited to SQL!!!! It can be used to determine the I/O capacity of any storage configuration.